The Potential Implications of Deepseek for Certain Industries

A Nuanced Take

As I shared in the last News of The Week, a Chinese AI start-up called Deepseek recently launched an AI model whose performance is on par with the latest US-based AI models. The catch? Deepseek claimed to have invested only $6 million in training it. This contrasts sharply with what companies like OpenAI or Anthropic have invested to develop their models (Anthropic claimed that its current model costs somewhere between $100 million and $1 billion to train). So, the debate in the financial industry seemed to revolve around a Chinese company being able to develop something similar at a fraction of the cost, not around the performance per se (which seems comparable).

The launch of Deepseek’s model kickstarted a bunch of speculation around the cost part of the equation. Deepseek disclosed that the $6 million figure ignored certain relevant costs (so it’s an adjusted figure), and several industry sources claim that the real cost was significantly higher and that the model was trained using Nvidia GPUs (H100), which are theoretically banned by current export restrictions. It seems quite unbelievable that China managed to leapfrog the US so significantly on cost in such a short period, and China has an incentive to lie about its developments (so the US thinks that export restrictions are not bearing fruit). Even so, I am assuming that both the cost and performance parts of the equation are accurate in order to understand the long-term implications of this development for some related industries. Full disclosure here: I don’t consider myself the most knowledgeable on the topic (and don’t think many people can claim they are), but the goal is to offer a nuanced take on what I believe this might mean over the long term.

As you might have already seen, anything that has to do with “AI” sold off quite materially on Monday, from hyperscalers (Amazon, Google, Microsoft) to semiconductors to Big Tech in general. As indices in the US are heavily exposed to these companies, the indices were also significantly down in pre-market trading (concentration cuts both ways).

The first thing that sort of surprised me was to see both semis and hyperscalers down significantly. Investments into AI infrastructure mean revenue for semis but expenses for hyperscalers, so I honestly have a tough time envisioning how it would be bad for both at the same time. If the cost of developing AI is much lower than previously envisioned, the Capex intensity of the hyperscalers should theoretically drop, making them more cash-generative in the future (I don’t think this is a differentiated view at this point, to be honest). In short, they would need to pay less money to Nvidia and other infrastructure providers. I don’t think the demand side of their equation would be very compromised because these models are run where the data is, and the data currently lies in the cloud (with no visible alternative). I am also skeptical that many companies would be willing to use their data to train a China-based AI model.

The above potentially means that AI revenue generated by semiconductor companies should decrease (or decelerate) going forward. I don’t think, however, that the ramifications here are as direct as many people believe. Many people are claiming that a lower cost to develop AI will lead to less investment in AI and, therefore, less revenue for all semiconductor-related companies. While this might be true over the short to medium term, I believe it misses the point long-term.

The semiconductor industry has historically relied on the costs of technology development decreasing (not increasing). The rationale here is that lower costs to develop technology end up leading to more technological innovation and faster and more widespread adoption, which ultimately results in a higher volume of chips required (which is the main KPI for much of the industry). Peter Wennink, ASML’s CEO at the time, explained this virtuous cycle at the company’s 2022 capital markets day:

And it's still a challenge today to keep connecting all the dots, but it's the value of Moore's Law, which basically reducing the cost per function, Yes. That will drive our business and will create these building blocks for growth and for solving some of humanity's biggest challenges, and we are a strong believer in this. And Moore’s Law is alive. It's still alive and kicking, And it's about the cost per function.

And we all know if the chip gives you more functionality, more value, then it decreases cost. We're going to create applications and solutions, and that's happening. I mean, things are actually happening across the globe.

If the costs of developing AI come down significantly, then (in theory) AI should proliferate faster and drive more technological innovation, which should eventually lead to more semiconductor content. This is what my gut tells me the long-term implication should be, but things might look very different over the short term, of course. If you give humans more means to innovate, what has happened throughout history is that they innovate more (not less).
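To make that intuition a bit more concrete, here is a minimal sketch of the arithmetic behind it. It assumes a constant-elasticity demand curve (my assumption for illustration, not something anyone in the industry has disclosed) and an illustrative 100x drop in the cost of AI compute; the function name and the elasticity values are hypothetical. Under that assumption, total spending only grows when demand is elastic enough (elasticity above 1), which is effectively the bet the "cheaper AI means more chips" argument is making.

```python
def spend_ratio(cost_drop_factor: float, elasticity: float) -> float:
    """Ratio of new total spend to old total spend after a cost drop.

    Assumed demand model (illustrative only):
        quantity = k * price ** (-elasticity)
    so that
        spend = price * quantity = k * price ** (1 - elasticity)
    """
    price_ratio = 1.0 / cost_drop_factor  # e.g. 100x cheaper -> 0.01
    return price_ratio ** (1.0 - elasticity)


if __name__ == "__main__":
    # Hypothetical elasticities: inelastic, unit-elastic, elastic demand.
    for elasticity in (0.5, 1.0, 1.5):
        ratio = spend_ratio(cost_drop_factor=100, elasticity=elasticity)
        print(f"elasticity={elasticity}: total spend changes by ~{ratio:.2f}x")
```

With a 100x cost drop, total spend shrinks to roughly a tenth if elasticity is 0.5, stays flat at 1.0, and grows roughly tenfold at 1.5. Nobody knows the real elasticity of demand for AI compute, so this only shows which way the argument cuts, not which outcome will happen.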

It’s also worth understanding which companies have current AI revenue at risk and which don’t. Quite a few companies within semicap have not seen AI tailwinds hit their valuations or their fundamentals yet due to the inherent lag in supply. As Christophe Fouquet, ASML’s current CEO, mentioned in the last earnings call (I’m paraphrasing): “If it were not for AI, the industry in general would be sad.” ASML has indeed been sad because it has not yet experienced the heightened demand brought by AI. Most of these benefits have accrued to certain players in the industry like TSMC and Nvidia.

I have never researched TSMC and Nvidia in depth, but they have been the first companies to benefit from rising investments in AI infrastructure. This makes me think that if investments decrease over the short term, they should also be the first companies to suffer from it. Don’t get me wrong, this lower demand would eventually spill over to the supply chain (through the bullwhip effect), but much of the supply chain had yet to see heightened demand from AI anyway, so it would mostly be as if nothing happened (to the fundamentals, not to the stock price).

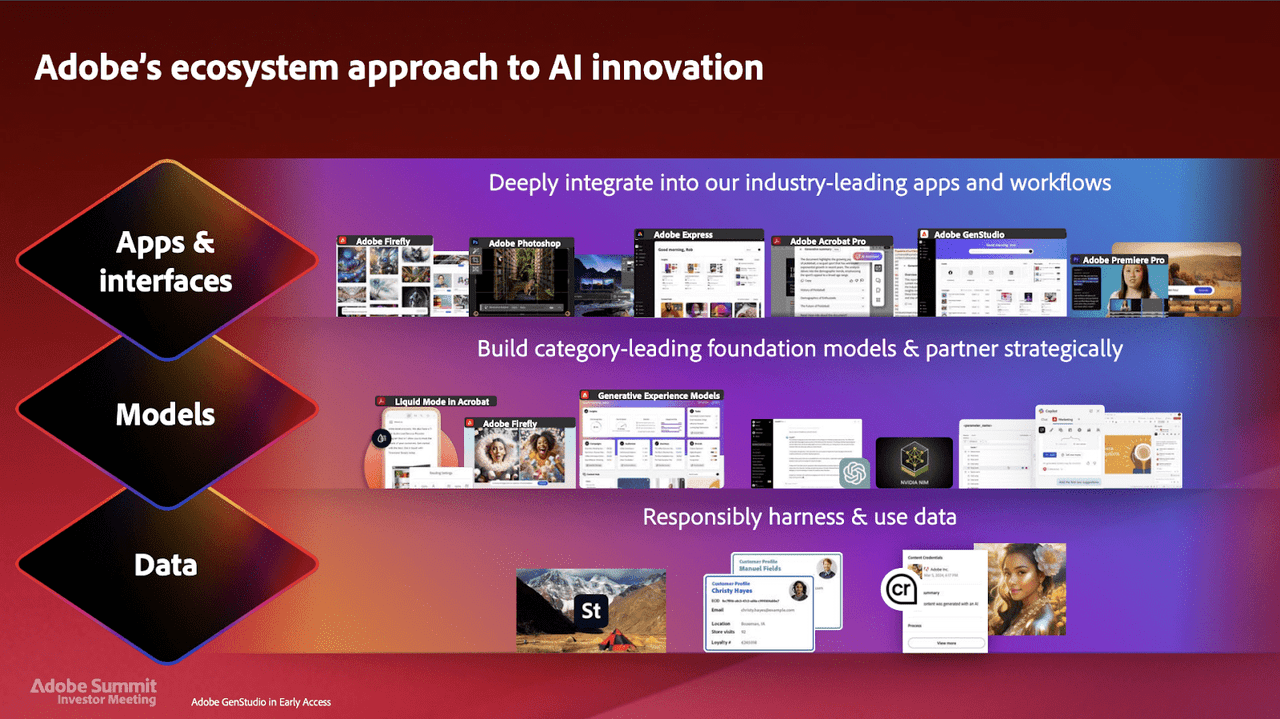

What seems pretty clear, regardless of how much credibility one gives to the cost part of the equation, is that LLMs seem to be in a race to the bottom (i.e., they are going to be commoditized). This is nothing new: many software companies have been saying for months that what matters is not the model per se but the differentiation in other layers. Not long ago, Adobe shared a framework with three layers, claiming that true differentiation would happen in the “Apps and interfaces” layer.

This is also the reason why Adobe is not afraid to allow the use of external models across its apps. The company believes that this commoditization and lower cost will lead to more content generation, which will ultimately benefit them through the interfaces (editing and distribution).

We are still very early in the AI arms race, and there’s no denying that having a strong opinion today seems a stretch, more so as I don’t consider myself an expert on the topic (I don’t think many people are, considering how early we are in AI). The goal of this article was to share my nuanced take on the topic, trying to understand what it might mean long-term for some of my holdings. ASML reports earnings on Wednesday, so I am sure the management team will get asked about this and will set the tone for other earnings releases.

Have a great day,

Leandro

This seems like a reasonable take. I’m skeptical they have achieved what they claim. If they have, I’d expect a serious bust in AI chips. If OpenAI can do 100 times as much with the chips they already have, they aren’t buying a ton more anytime soon. That would make it the latest game-changing technology to suffer a bust, e.g., railroads and fiber.

100% agree that longer term, cheaper AI means many more use cases. It’s a simple fact that when something is 2 orders of magnitude cheaper, you will find more use cases with an acceptable ROI.

This is awesome for humanity in the long run if true.

Great article! I still have doubts about the real cost of this AI - it’s hard to believe it was only $5mm. If that’s really the case, it’ll become a commodity and spread even faster…